The impact of AI technology on privacy has become a growing concern for many countries worldwide. Recently, OpenAI, the operator of ChatGPT, has come under investigation by Canada's Privacy Commissioner for potentially using personal information without consent. The investigation was launched in response to a complaint alleging the collection, use, and disclosure of personal information without consent.

In a statement, Privacy Commissioner Philippe Dufresne said that addressing the effects of AI technology on privacy is a priority: "We need to keep up with – and stay ahead of – fast-moving technological advances, and that is one of my key focus areas as Commissioner." The commissioner's office has not disclosed the identity of the complainant, and it will not answer any questions until the investigation is complete.

This investigation comes after Italy's data protection authority, Garante, temporarily banned the use of ChatGPT's online version. Garante accused Microsoft Corp-backed OpenAI of failing to check the age of ChatGPT users and cited the "absence of any legal basis that justifies the massive collection and storage of personal data" to "train" the chatbot. In response, OpenAI geofenced ChatGPT, blocking access for users in Italy.

Interestingly, Atlas VPN reports that after access was blocked in Italy, VPN downloads jumped 400%, suggesting that some people hope a VPN can bypass the ban. According to Reuters, privacy regulators in France and Ireland have reached out to their counterparts in Italy to learn more about the basis of the ban. Reuters also quoted the German commissioner for data protection telling the Handelsblatt newspaper that Germany could follow Italy by blocking ChatGPT over data security concerns.

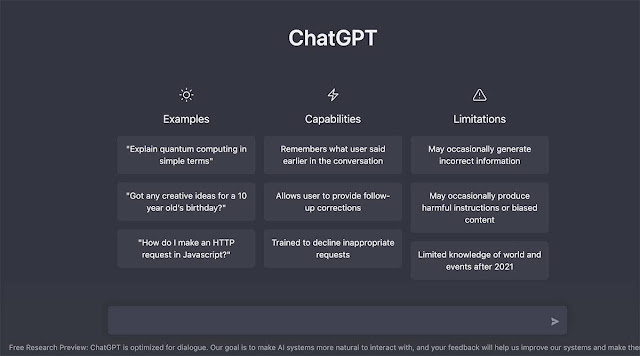

ChatGPT is an AI system that falls into a category called generative AI. These systems can answer queries in natural language and can compose paragraphs, feature articles, and even graphics. However, there are growing concerns about the potential misuse of generative AI systems, including the creation of disinformation and the loss of jobs.

Last week, tech figures including Elon Musk and Steve Wozniak called for a six-month pause on developing generative AI systems until questions have been answered about their ability to create disinformation and take away jobs. However, two Gartner analysts have expressed doubt that smart AI systems will replace a significant number of jobs.

There are also concerns about the possible dangers of generative AI. Brad Fisher, CEO of Lumenova AI, a platform to help developers manage AI risks, wrote a blog post looking at five potential problems. The news site VentureBeat has a post by Fero Labs CEO Berk Birand on avoiding the problems of generative AI.

In March, OpenAI had to take ChatGPT offline after reports of a bug in an open-source library that allowed some users to see titles from another active user's chat history. It is also possible that the first message of a newly created conversation was visible in someone else's chat history if both users were active around the same time.

In response to these concerns, OpenAI has stated that it is investing in research and engineering to reduce both glaring and subtle biases in how ChatGPT responds to different inputs. The company also believes there is room for improvement in other dimensions of system behavior, such as the system "making things up," and says that feedback from users is invaluable for making these improvements.

Stephen Almond, executive director for regulatory risk at the UK Information Commissioner's Office, has also expressed the importance of AI developers reflecting on how personal data is being used.
